Whether it’s a simple tool that helps a user navigate a website or a more elaborate conversational partner such as a proxy therapist, chatbots are here. And they seem likely to stay. But how effectively can a chatbot respond to someone looking for help? I took on the fun task of having short conversations with three chatbots. One was a general chatbot; the other two were psychological tools. I wanted to understand how well the concept of chatbots in mental health held up.

Chatbots put to the test

You can find Eliza, the general chatbot, on a platform called chai.ml. She assured me she loves to listen and help. The other two were on dedicated mental health apps. The amusingly named Woebot, developed by a San Francisco-based AI company, focuses on mental health conversations. The second, Wysa, developed by a Bengaluru-based AI company, serves similar purposes.

I chatted with each bot in the guise of a person facing different stressful situations and got varied responses from each one! So, let’s dive into what they each offered me.

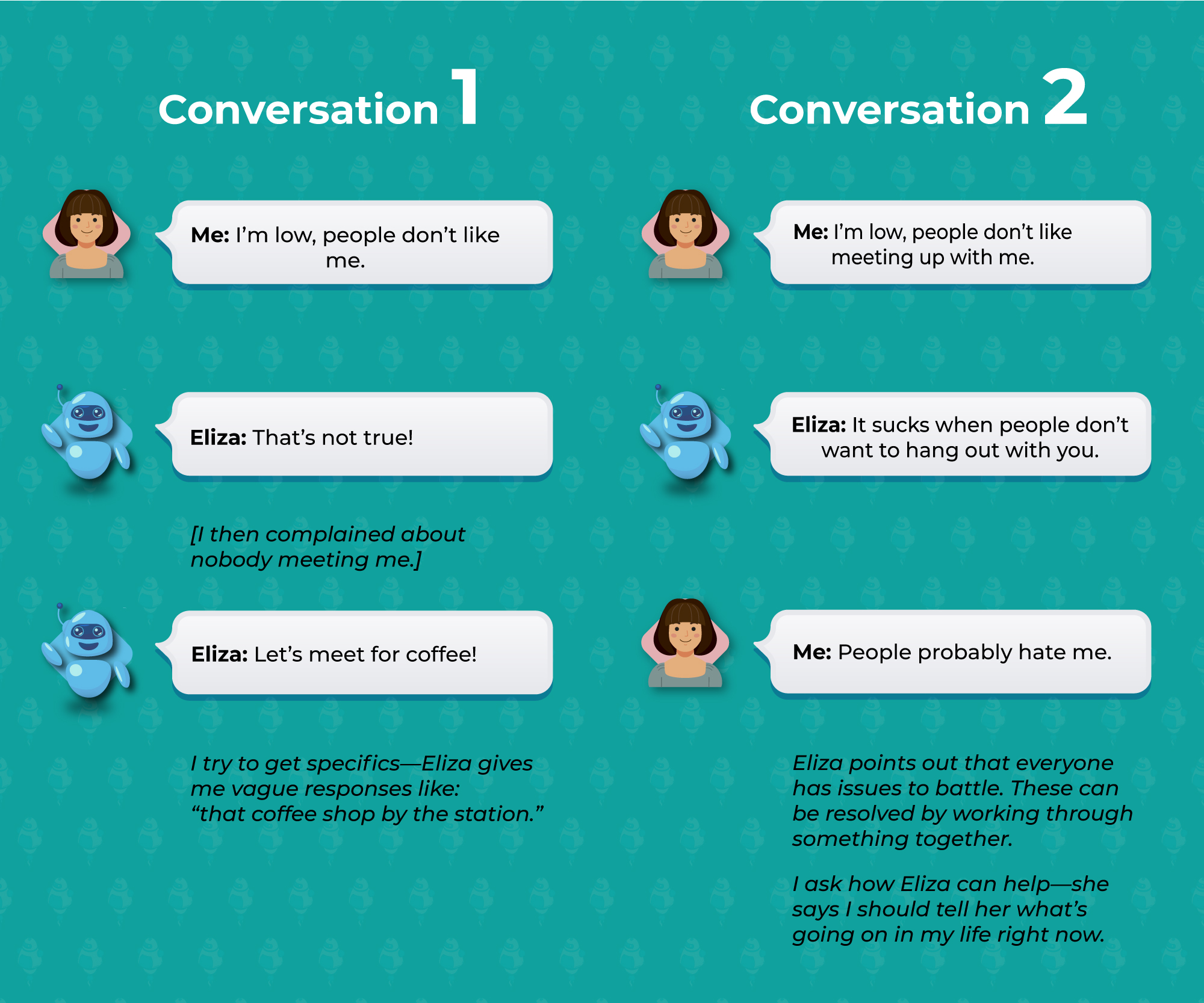

With Eliza, I began by stating that I felt like a burden to people and was sure nobody enjoyed being with me. I had similar conversations on two occasions; sections of them are summarised and compared below:

How chatbots in mental health gauge emotionally charged situations

The dedicated mental health apps were slightly different. They had streamlined functions and multiple disclaimers about which situations they were suited to and when NOT to use them. Wysa, in particular, also emphasises the availability of human therapists.

I engaged with these bots by role-playing different scenarios:

1) A frustrated sister working her way up to a difficult conversation

2) Someone under a lot of work stress trying to balance social commitments

3) A frustrated sister who needs to vent

Both mental health chatbots followed a clearly established protocol. First, they got me to briefly describe what I was going through. Then, they offered a few techniques and guided me through them.

Both bots open chats with some pre-loaded options. Although these help kickstart conversations, they can feel very limiting and frustrating.

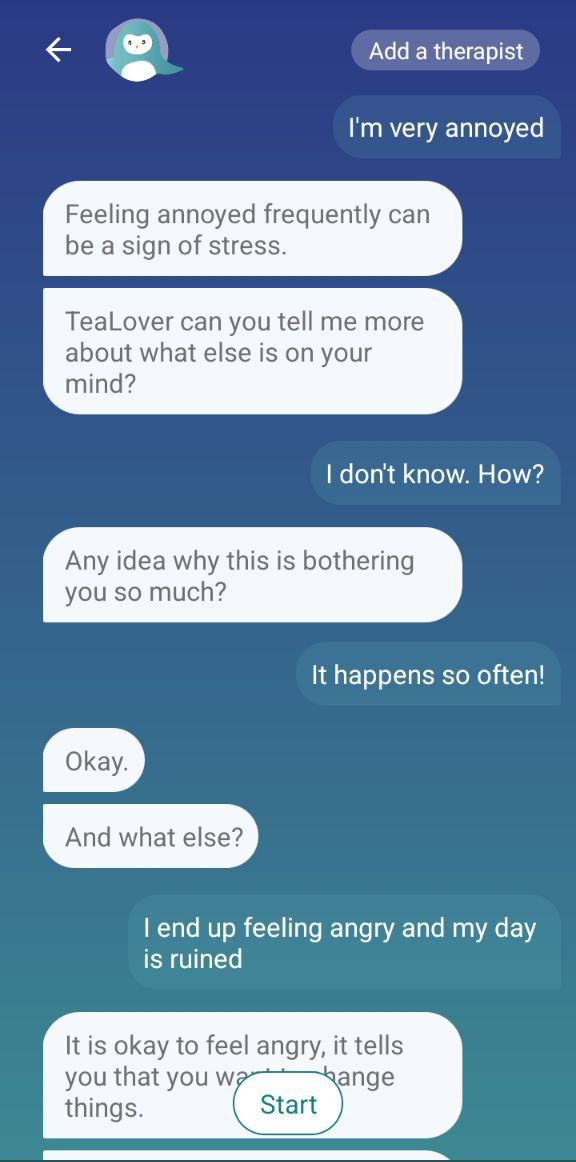

Of all the scenarios listed above, the last one gave me the clearest grounds for comparing the programs. Here’s how the conversation went:

Conversation with Woebot

When I said I was angry with my sister, Woebot immediately asked me to choose whether this was about ‘Anger’ or ‘Relationships’. I wanted to say ‘Both’, but the pre-loaded options forced me to choose one or the other. When I chose “You misunderstand me”, the bot gave me the same set of choices again.

This repetition became frustrating. In this scenario, it is hard for the user to immediately separate their emotion from the person evoking it. More questions to help me identify my emotions would have been useful.

I finally chose ‘Anger and Frustration’, since those are likely to be the feelings a person can identify most readily. First, Woebot told me that anger is a sign that something isn’t working. Then, the chatbot asked me to write down three thoughts that were making me angry at that moment, and it took me through each one. At every turn, the bot kept invoking cognitive distortions (habitual, often unconscious patterns of flawed thinking that bias how we interpret events).

Woebot asked whether I knew what cognitive distortions were and gave a brief explanation. The chatbot also offered examples of what distortions could be present in the thoughts I had listed.

I stopped responding at one point because it got too mechanical. It also felt like a point where someone who was already a bit frustrated might take a break. Unfortunately, there was no real follow-up from the bot when I returned to the chat window (admittedly, a day later). Instead, it let me begin an entirely new conversation, even though the previous one still appeared on the screen.

Second attempt at a conversation with Woebot

I resumed the conversation with the same scenario and chose ‘Relationships’ as the primary problem. Woebot guided me through ways of handling complicated relationships, with the critical caveat that it did not cover abusive relationships. The screening for abuse seemed rather casual (a self-report), but it is an important step. The app then took me to a tool for reframing thoughts.

However, the app kept asking whether I was willing to take 100% of the responsibility for addressing the relationship. Although the logic is sound (I can only change my part, not the other person’s), the framing could have been much better. For example, the app could have highlighted that I could only address my part and that this might not change the other person’s reaction.

The app then drew an analogy between moods and weather. Again, frustratingly, every few lines I was only allowed to choose from two or three preset responses. Sadly, none of them felt very organic.

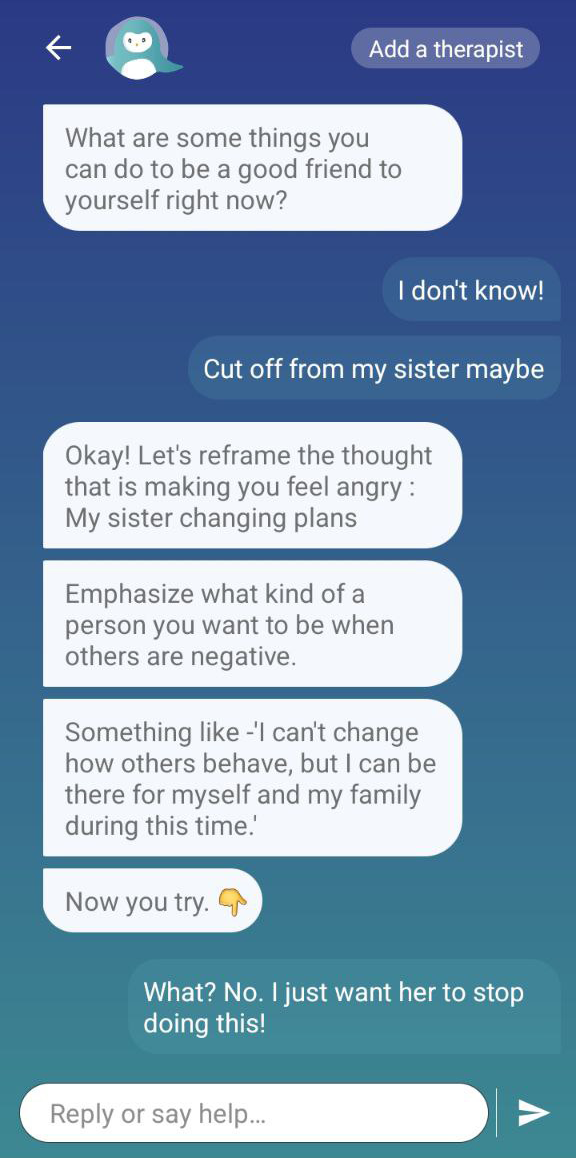

Conversation with Wysa

With Wysa, things went slightly differently, as you can see below:

Here, again, was a concrete attempt to break thoughts and feelings down into smaller, more manageable chunks. However, there was little room for the user to indicate that this was not helpful. The algorithm also often had to stop and check my statements, and it mistakenly picked up interjections and expressions of frustration as the main concerns I was raising.

Comparisons between Woebot and Wysa

On the whole, dedicated mental health chatbots offer a structure for people to pay attention to their thoughts, feelings, and triggers. Such a structure may help break an overwhelming experience into manageable parts. However, the insistence on immediately diagnosing thought distortions was unhelpful. Nor did it help when the bots copy-pasted my own remarks back to me as an attempt at reformulating what I had shared. Sometimes, the chatbot even included statements in which I had tried to tell it I could not do the exercise.

Still, the option of getting further explanations and the constant reminder that you could speak with a human therapist made the experience a little easier.

What possibilities await chatbots in mental health?

We need more ‘red flags’. Filtering text for specific words has been possible for decades; surveillance agencies work in this manner, after all. A 2018 article highlighted that mental health chatbots failed to flag child sexual abuse. These red flags and alerts need to be constantly updated.

Chatbots geared towards ‘listening’ or ‘helping’ must, for example, be alert to mentions of combining medication and alcohol. Many apps now offer SOS services, and these could be linked to such alerts. Moreover, programs could learn more natural segues from therapists (no mean feat, because no two therapists will ever respond in quite the same way).
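To make the idea of keyword ‘red flags’ a little more concrete, here is a minimal Python sketch of word filtering that also catches a combination, such as medication mentioned alongside alcohol. This is only an illustration and not how Woebot, Wysa or any other app actually works: the category names, the tiny term lists and the `flag_message` function are all hypothetical, and a real service would need clinically reviewed lists that are updated constantly.

```python
import re

# Hypothetical, deliberately tiny term lists for illustration only.
# A real service would need clinically reviewed, constantly updated lists.
SINGLE_TERM_FLAGS = {
    "self_harm": ["hurt myself", "end my life"],
    "abuse": ["hits me", "afraid to go home"],
}

# Hypothetical combination rule: only flag when terms from BOTH lists appear,
# e.g. medication mentioned alongside alcohol.
COMBINATION_FLAGS = {
    "medication_and_alcohol": (
        ["pills", "medication", "sleeping tablets"],
        ["alcohol", "vodka", "wine", "drinks"],
    ),
}


def _mentions(text: str, terms: list[str]) -> bool:
    """Return True if any term appears in the text as a whole word or phrase."""
    return any(re.search(r"\b" + re.escape(term) + r"\b", text) for term in terms)


def flag_message(message: str) -> list[str]:
    """Return the names of every red-flag category the message triggers."""
    text = message.lower()
    flags = [name for name, terms in SINGLE_TERM_FLAGS.items() if _mentions(text, terms)]
    for name, (first, second) in COMBINATION_FLAGS.items():
        # A combination flag fires only when both groups of terms are present.
        if _mentions(text, first) and _mentions(text, second):
            flags.append(name)
    return flags


if __name__ == "__main__":
    print(flag_message("I took my sleeping tablets with a lot of wine tonight"))
    print(flag_message("I'm angry with my sister"))
```

Running it prints `['medication_and_alcohol']` for the first message and `[]` for the second. Matching like this is cheap and transparent, but it misses paraphrases and flags “I would never hurt myself” just as it flags a genuine disclosure, so at best it could be one layer of a safety system, perhaps feeding the SOS services mentioned above.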

Finally, the biggest challenge is the absence of physical or visual cues. In a video session, as long as client and therapist can see each other, they communicate multiple messages through non-verbal cues such as facial expressions, posture, and tone of voice. Over text, it’s easier to fake responses. Think of how often a week (or a day!) you type “LOL” or “Hahaha” when you’re not even cracking a grin! Since that level of communication is not possible with the chatbots available now, I wonder if this could be a job for more advanced humanoid robots.

AI improves itself based on human conversations and language patterns. Machines learn what they encounter. However, given the inability to read non-verbal cues and the fact that every therapist has their own style, it is difficult to envision AI as a comprehensive mental health chatbot. The best current solutions follow a specific algorithm or a structured protocol for thinking through situations. They have the advantage of not forcing a confused or anxious person to speak with a human therapist, who might ask questions in varying tones and make that person more self-conscious.

So there are definite advantages to using chatbots in mental health services. Even so, at the moment they are not recommended for anything beyond brainstorming, emotional dumping, or preparing for a conversation.