Remember when Forrest meets and shakes hands with President Kennedy in Forrest Gump? Consider that a predecessor of deepfake technology. There is far more material to work with today. Every photo dump adds to the data available online, and celebrities feature in movies, interviews, and press junkets that can be mined to create any number of videos. Now, with deep learning, you can put anyone in any video and make it seem like they’re doing anything.

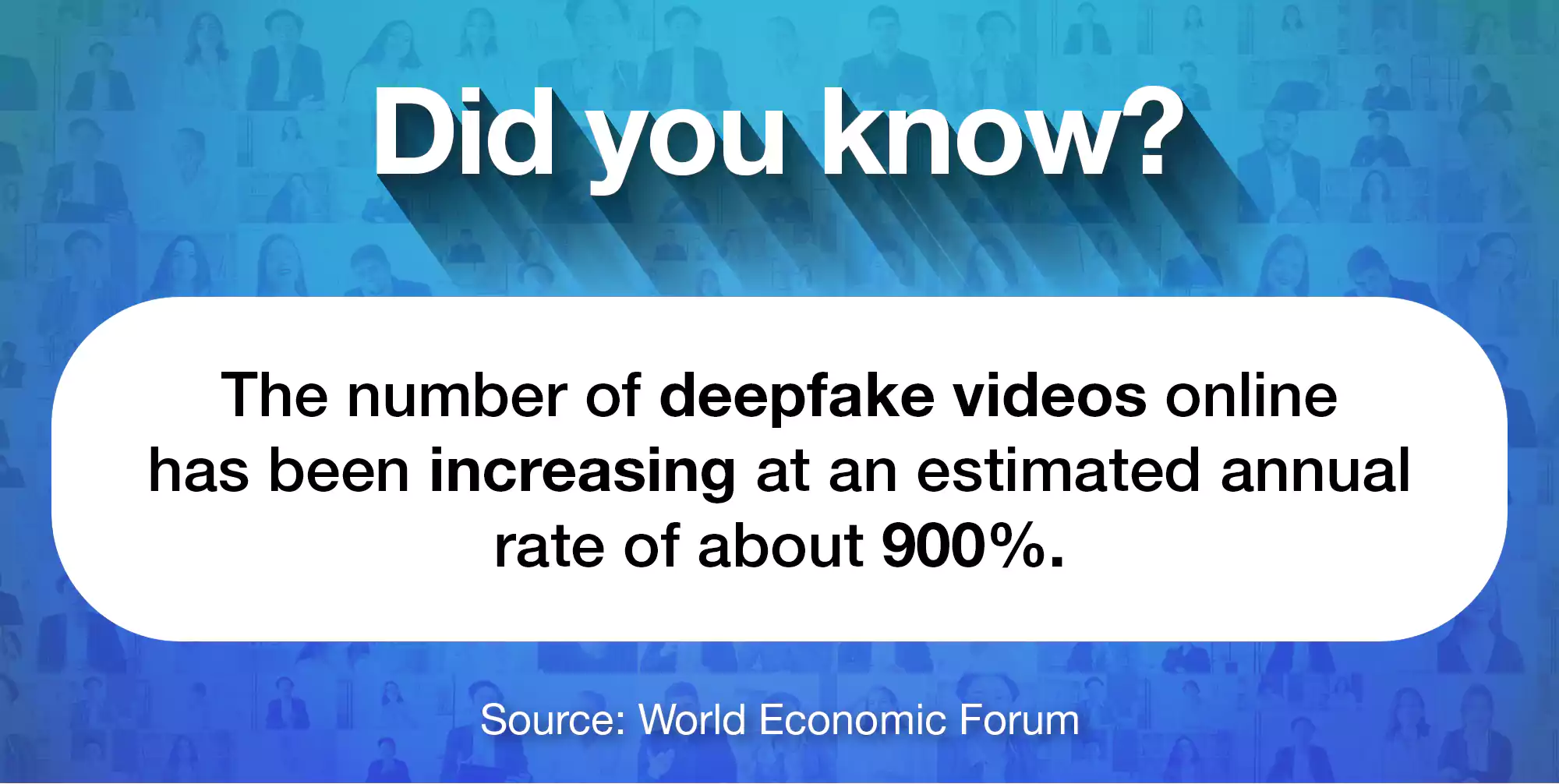

If you haven’t heard of it before, deep learning is a branch of machine learning that trains layered neural networks, loosely inspired by the human brain, on large amounts of data. Deepfakes typically rely on autoencoders and Generative Adversarial Networks (GANs). In a GAN, one network generates images while a second tries to tell them apart from real ones, and the contest between the two pushes the output towards realism. On their own, these tools can produce amusing effects. When the effects are overlaid on existing media, however, the result is synthetic media: deepfakes, or AI-generated audio and video, realistic enough that it is hard to tell the fake from the original. And now, deepfake videos are taking over advertising, giving rise to many questions and concerns.

The restorative powers of deepfake technology

Deepfake commercials can be as unrealistic as the Cred Bounty commercial with deepfaked Putin and Modi or as realistic as the Russian commercial starring Bruce Willis. Willis, who has effectively retired from the industry because he suffers from aphasia, reportedly agreed to let a firm specialising in deepfake technology use his likeness. So, even though he isn’t physically acting, his face can keep appearing on screen, and he can continue earning. James Earl Jones, too, will continue to be the voice of Darth Vader in Star Wars without recording any new content, thanks to voice cloning. Also in Star Wars, a deepfake Carrie Fisher reprised the role of Princess Leia after the actress’s death, with her daughter’s permission.

Filmmakers can also create convincing translations of films in which the original actors appear to speak the new language in their own voices. The technology therefore has the potential to remove the inconvenience of dubbed audio or of shooting a scene separately for each language.

Then, the question arises: how do we know whether the subject in the deepfake has given their permission for an AI-powered overlay?

Deepfake technology and the dismissal of consent

It is possible to create content without the approval of the celebrity being ‘imitated’. If a celebrity sues a small business for using their likeness or voice, the lawsuit could simply hand the company publicity it would welcome. So, what does a public figure who has been deepfaked do? If celebrities react to a seemingly harmless video showing their likeness, they risk being seen as bad sports. There is even the possibility that cancel culture could threaten them. However, if they don’t react, such videos could proliferate, affecting their public image, their commercial dealings, or events like elections and mergers.

There have always been doppelgängers in advertising, like the Arbaaz Khan and Madhavan lookalikes from the ‘Five Star’ candy bar ads in India. Those are a different matter from the deepfake ads featuring Elon Musk and Leonardo DiCaprio, which are doing the rounds without either celebrity’s approval. What sets the deepfakes apart is that they could give the impression that these personalities are endorsing the product. Even so, fair use laws may protect such a video if the company treads the right line.

How is deepfake technology legal?

It seems unreal that companies could use a person’s likeness without consent and face no legal repercussions. Yet, surprisingly, creating a deepfake ad can be legally acceptable. Broadly, you need to show that your use of the image is satirical and that a reasonable adult can spot that it is fake. Right now, a TikTok account named @deeptomcruise, with a bio that states “Parody. Also younger.”, is putting out numerous Tom Cruise deepfake videos, unendorsed by the actor.

Anyone who has footage of themselves, particularly online, can be deepfaked, so it is not just celebrities who have to bear the consequences. While some of this is innocent, such videos could damage someone’s reputation if used irresponsibly or maliciously. In response, those affected can sometimes argue copyright infringement or libel/slander. Still, the best bet is probably the right of publicity, also known as personality rights or rights of persona, depending on where you live. This right protects against the unauthorised use of an individual’s name, likeness, or other recognisable personality traits for commercial purposes.

In parts of the United States, the law prohibits using deepfakes in pornography and political campaigns. The rules are still unrefined in India. There is an IT Act in place, which deepfakes can violate, but the law needs to be more explicit about this issue. Synthetic media is going to keep perfecting itself. Without legal measures and software to restrict its malicious or irresponsible use, the technology could be used to violate human rights. Non-consensual ads exploit the celebrity’s star power, influence, or any number of other personality traits that they have spent years building as part of their public image. Capitalising on such an image remains a problem, however you argue your case, even legally.

The moral dilemma

Here’s my opinion: if you’re getting the effect of a particular celebrity’s endorsement without paying said celebrity, there’s something morally wrong with that. It seems redundant to say, but celebrities are people too. One of the earliest applications of deepfake technology was in pornography, which made it seem nefarious from the outset. There is no reason to treat anyone that way; it should not be the price of fame. And what of ordinary individuals? In more conservative cultures, the consequence of something like deepfake revenge porn could be death.

Speaking of death, ‘Re;memory’ is a service offered by South Korean startup DeepBrain AI that creates digital twins of deceased loved ones. The data is collected while the subject is alive, and the avatar, created posthumously, has the person’s appearance and voice. CEO Eric Jang wants to explore this application further and “create a new funeral culture”. While the service probably comforts family members in their grief, it seems like the AI version of Harry Potter’s Resurrection Stone to me.

The price is right

Deepfakes may go wrong a hundred different ways, but sometimes they are just the right thing. Last Diwali, Rephrase.ai (an Indian startup) teamed up with Cadbury for their ‘Not Just a Cadbury Ad’ campaign. The startup used machine learning to recreate Shah Rukh Khan’s face and voice so that Cadbury could produce ads for local retailers. By scanning the QR code on the Limited Edition Cadbury bars, retailers could enter their details and be endorsed by India’s most prominent brand ambassador.

The argument for deepfake advertising is that small businesses can create advertisements at a small cost. The future of advertising is in hyper-personalised commercials: an ad on your feed that might address you directly. An advertiser could easily change the skin tone of the persona in the ad to match the viewer’s ethnicity, without hiring someone of that ethnicity.

Companies like Generated Photos are creating images of people who don’t exist and selling them for about $20 per month. All you have to do is select a few filters like ‘female’, ‘blonde’, and ‘surprised’, and download your customised avatar. You can use this avatar anywhere, including for commercial purposes, with no copyrights involved. An advertiser therefore faces little liability, needs little technical expertise, and can save on hiring a casting crew. Given the current decline in the ad market, this is excellent news for advertisers. Of course, it also means many people could be out of a job, but that’s another conversation.

Synthetic media is the future, and deepfakes are here to stay. There is no escaping it. For now, a business that uses an excess of AI in its ads and customer relations without a precise and popular angle will probably find it hard to build trust with customers. But all that’s going to change, and the market will have to play nicely with the new kid. Detection systems and lawmakers need to keep up so that we can enter this new world with our dignity intact.