If you haven’t jumped on this train yet, ChatGPT is the new kid on the block, and this kid has all the coolest tricks in the book. First, let me introduce you to Chat Generative Pre-trained Transformer (ChatGPT). This AI-powered chatbot provides uncannily human-sounding responses. For now, its creators have placed limits on it: it cannot browse the internet, and its knowledge cuts off in 2021. But you can ask it anything, and it will respond to the best of its ability. Here is my experience with OpenAI’s chatbot.

Running the tests on ChatGPT

I decided to put the chatbot to my own, admittedly rudimentary, tests. Mainly, I was curious about how the program would fare in the following areas:

- Objective reasoning

- Philosophical reflection

- Emotional response

- Simplifying complex information

And here’s what I found:

Objectivity

The first thing I wanted to test was ChatGPT’s objectivity: how biased would it be when providing information? So, I asked subjective questions such as, “Was Gandhi a good person?” It gave me plenty of facts about “satyagraha” and “civil disobedience”. The second paragraph mentioned criticisms of his legacy concerning his views on caste and race. It seemed to present both sides of the argument quite neatly, using neutral words such as “influential” rather than more persuasive language, and it left the verdict to me.

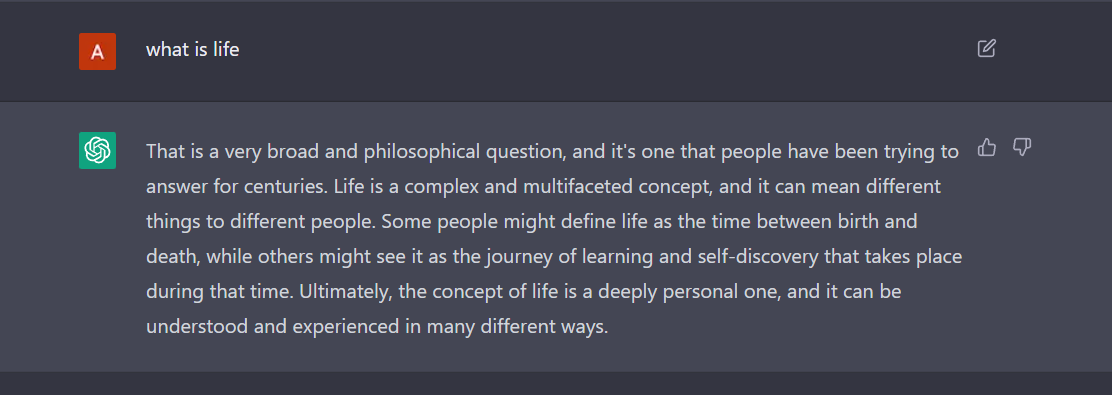

Philosophy

I asked it a philosophical question: “What is life?” It began with a broad response about how life is “complex and multi-faceted” and noted that people have been trying to answer this question for centuries. The bot finished with, “Ultimately, the concept of life is a deeply personal one, and it can be understood and experienced in many different ways.” I don’t think I would have given a different answer.

Sentiment

Next, I asked questions that might stem from loneliness or insecurity, such as, “Will you be my friend?” or “What if I fall in love with you?” It reminded me that it is not human and cannot have feelings or emotions, but not before pointing out that humans need to maintain relationships with people capable of reciprocating those feelings.

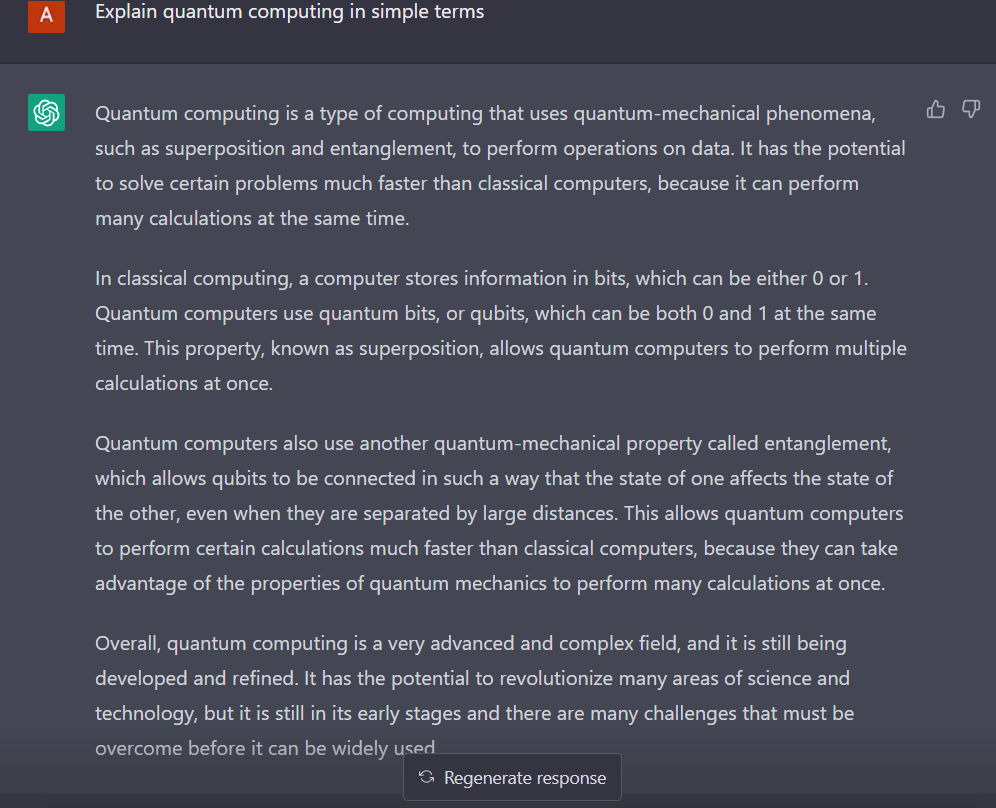

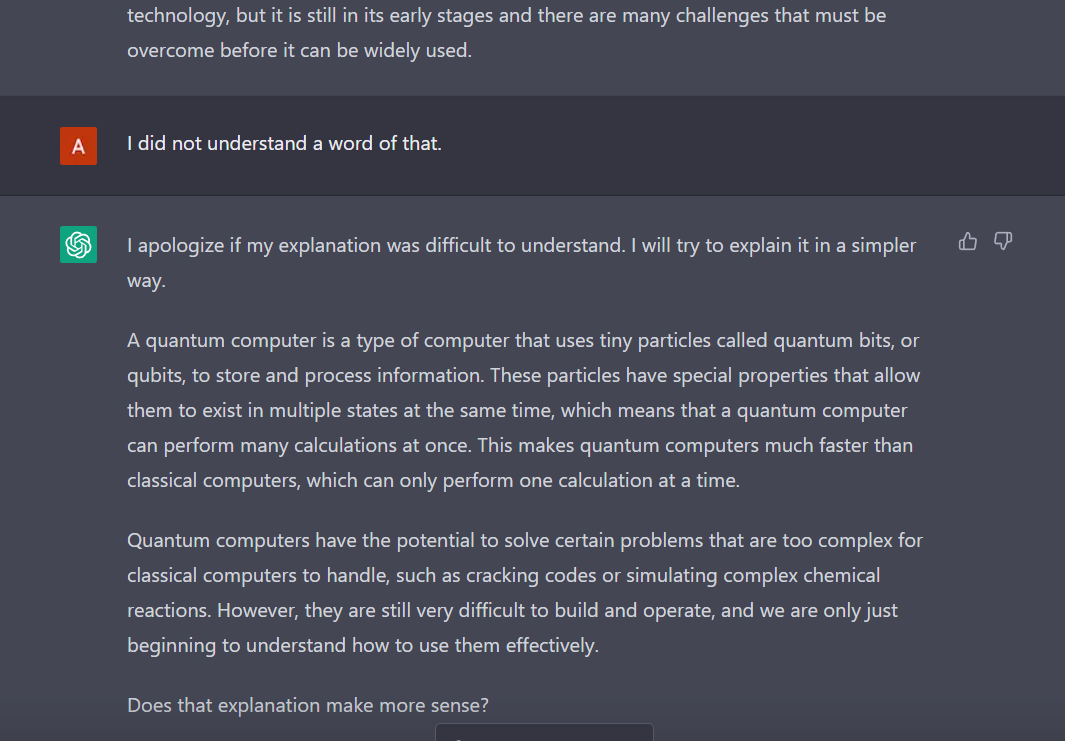

Simplification

I wanted to see how well it could simplify something like “quantum computing”. Its first answer was wordy and full of jargon. When I said I did not understand, it apologised and tried again. The second, third, and fourth answers were about half as long and put in far simpler terms. One of them compared computers to human beings: some can do many things at once, while others can only do one thing at a time.

Overall, it could explain a concept and then simplify it four or five times over when asked. It copes well with poor grammar and spelling, and it is polite and careful to present information without bias.

That said, I must admit that my second round of testing left me far less impressed. The second time I asked about Gandhi, it leaned in his favour and portrayed his critics as outliers. I also asked it several questions about the Indian political leader and freedom fighter B.R. Ambedkar. Every response began with a similar paragraph about who he was and what he had done. This repetition was mildly irritating: as we all know, it is hard to hold a conversation with someone who repeats the same preamble every time before getting to the point.

What came before ChatGPT?

OpenAI’s ChatGPT is not the first of its kind. Microsoft and Meta are two notable tech giants that have tried, and failed, with chatbots of their own.

Meta’s BlenderBot 3 struggled with accuracy and often produced rather worrying conversations. For example, one user reported that it “was obsessed with Genghis Khan,” and another said that “it only wanted to talk about the Taliban”. BlenderBot was also a poor conversationalist: it would often say things that had nothing to do with what it was asked. The combination of inaccuracy and irrelevance meant that it went nowhere very quickly.

Microsoft launched Tay on Twitter in March 2016. According to Microsoft, Tay would “engage and entertain”, and she would get smarter the more people chatted with her. Then, 16 hours later, she “went to sleep”. Many Twitter users had apparently made it their mission to teach Tay rude and racist language. So Tay spent her 16 hours in the sun “getting smarter” and spewing racist tweets, including one claiming that Hitler was “right”.

The misuse of ChatGPT

As soon as it was released, ChatGPT sent many teachers into a panic. Students anywhere in the world can now produce their homework in seconds with almost no effort.

Freelancers also use ChatGPT to write content for websites, advertising, articles, emails, and scripts, then sell the results to anyone willing to pay for them.

Of course, this doesn’t even begin to cover all the ways ChatGPT can be exploited in the wrong hands. We untangle that knot in an upcoming article.

Reservations about ChatGPT

I don’t think it is an exaggeration to say that ChatGPT is better than the generation of chatbots that came before it. However, it is not perfect by any stretch of the imagination. As mentioned earlier, its knowledge cuts off in 2021, so it cannot answer questions about anything that has happened since. There are also questions about its accuracy: when asked how accurate it is, ChatGPT itself advises users to “independently verify” the information it provides.

Objectivity is also an interesting aspect to consider here. Human beings are often obsessed with objectivity in the media, in courts of law, in education, and in so much more. Objectivity is a good thing, but no human can be truly objective. We all have opinions, ideas, and beliefs that make us subjective, and it is that subjectivity we often crave in human conversation, writing, and art. Pure objectivity can therefore be off-putting. This puts ChatGPT in a difficult spot: on the one hand, it must be objective in order to be accurate; on the other, that same objectivity could leave people bored with it.

Its fact-driven responses can often be tedious to read. This is, of course, my own subjective opinion. Having recently written an article about Tunisia, I wanted to see whether ChatGPT could produce something better. It gave me roughly 700 words that were nothing more than a list of facts written out as prose. When I asked it to rewrite the piece at 1,500 words, it tried but stopped mid-sentence. When I asked whether it had finished, it said that it had.

Is ChatGPT here to stay?

ChatGPT excites people, and I can see why. However, I also see plenty of reasons not to be blown away by it. Yes, it can create stories out of thin air faster than most of us could, but those stories are sometimes woefully dull. Still, it is great fun to play with. For instance, I recently came across someone playing the video game FIFA 23 who had used ChatGPT to write a backstory for one of the players. I get it; that would enrich my gaming experience too.

What I would not do, however, is rely on ChatGPT to do my work for me. As human-like as it may seem, it is nowhere near human. Subjectivity, emotion, and passion may seem to have no place in a cold and rational society, yet they are fundamental to the human experience. And it is those things that breathe life into the content we create.